The source code for all of the listed projects is available on my GitHub profile, and a link to each individual repository appears at the end of each project description.

Personal Projects

1. Vulkan Deferred Renderer

C++ Vulkan GLSL Dear ImGui Rendering GUI Shaders

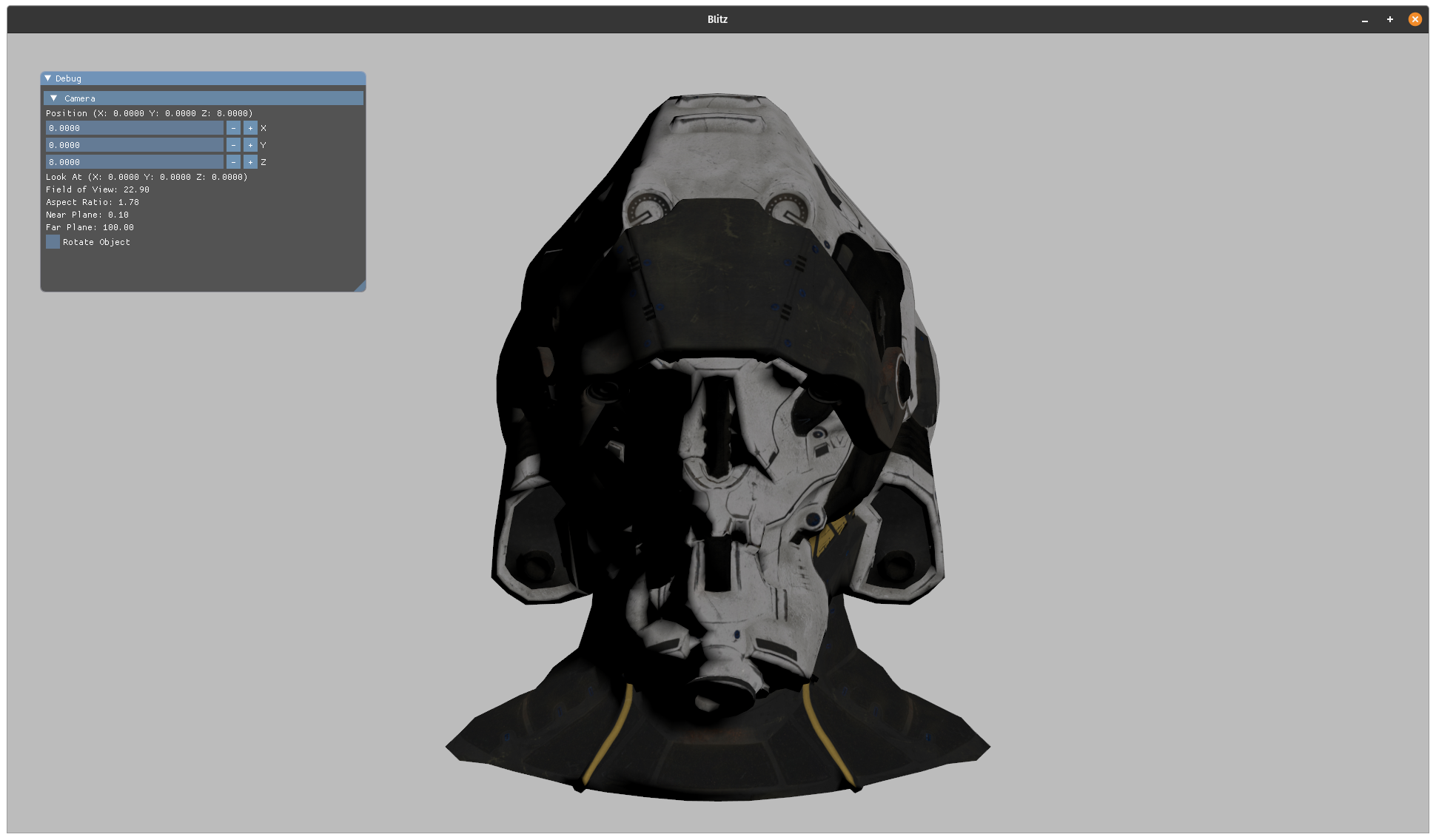

A snapshot of a model loaded with the renderer

I attempted to build a deferred renderer using Vulkan and Dear ImGui. My intent was to improve my knowledge of Vulkan and realtime rendering, while also giving others a way to see what it’s like to build a renderer from scratch. I have mostly abandoned this project now, but I intend to repurpose it for an upcoming project, which I will cover in more detail in future blog posts.

The source code to the basic setup has been published here.

2. Odin - A Vulkan-based Path Tracer

C++ GLSL Vulkan Realtime Rendering Shaders

This project was my first time getting my feet wet with the Vulkan API. When NVIDIA announced their first GPU series featuring the Turing architecture, I decided to test if it was possible to write a real-time raytracing application without a dedicated GPU.

The renderer uses a single compute shader to perform the path tracing algorithm and write the result into a texture image which can be sampled from the graphics pipeline. It also sports the following features:

- Support for diffuse, caustic, and dielectric materials

- Tracing of geometry serialized in the OBJ file format

- A basic Bounding Volume Hierarchy using axis-aligned bounding boxes
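As a rough illustration of what traversing a hierarchy of axis-aligned bounding boxes involves, the sketch below shows the common "slab" ray-box test in C++. It is not the shader code from the repository: the `Vec3`, `Ray`, and `Aabb` types and the `hit_aabb` and `make_ray` functions are hypothetical names chosen for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>

// Hypothetical minimal types for this sketch.
struct Vec3 { float x, y, z; };

struct Ray {
    Vec3 origin;
    Vec3 inv_dir;  // component-wise 1 / direction, computed once per ray
};

struct Aabb { Vec3 min, max; };

// Slab test: clip the ray parameter interval [t_min, t_max] against the three
// pairs of axis-aligned planes and report whether anything is left.
inline bool hit_aabb(const Ray& ray, const Aabb& box, float t_min, float t_max) {
    assert(t_min <= t_max);
    const float origin[3] = {ray.origin.x, ray.origin.y, ray.origin.z};
    const float inv[3]    = {ray.inv_dir.x, ray.inv_dir.y, ray.inv_dir.z};
    const float lo[3]     = {box.min.x, box.min.y, box.min.z};
    const float hi[3]     = {box.max.x, box.max.y, box.max.z};
    for (int axis = 0; axis < 3; ++axis) {
        float t0 = (lo[axis] - origin[axis]) * inv[axis];
        float t1 = (hi[axis] - origin[axis]) * inv[axis];
        if (t0 > t1) std::swap(t0, t1);  // ray points in the negative direction
        t_min = std::max(t_min, t0);
        t_max = std::min(t_max, t1);
        if (t_max < t_min) return false;  // the intervals no longer overlap
    }
    return true;
}

// Builds a ray with precomputed reciprocal direction. A zero direction
// component yields an infinite reciprocal on IEEE 754 hardware.
inline Ray make_ray(Vec3 origin, Vec3 dir) {
    return Ray{origin, Vec3{1.0f / dir.x, 1.0f / dir.y, 1.0f / dir.z}};
}
```

In a BVH traversal, a test like this would run at every visited node, and only the children whose boxes are hit need to be descended into.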

Overall, the implementation is still bottlenecked by the large number of branching instructions in the shader, which limits the renderer to around 10-20 FPS on my hardware. With some time spent optimizing the shader code, I could envision the renderer having more practical applications.

The source code can be found here.

3. Raytracing In One Weekend In Rust

Rust Raytracing

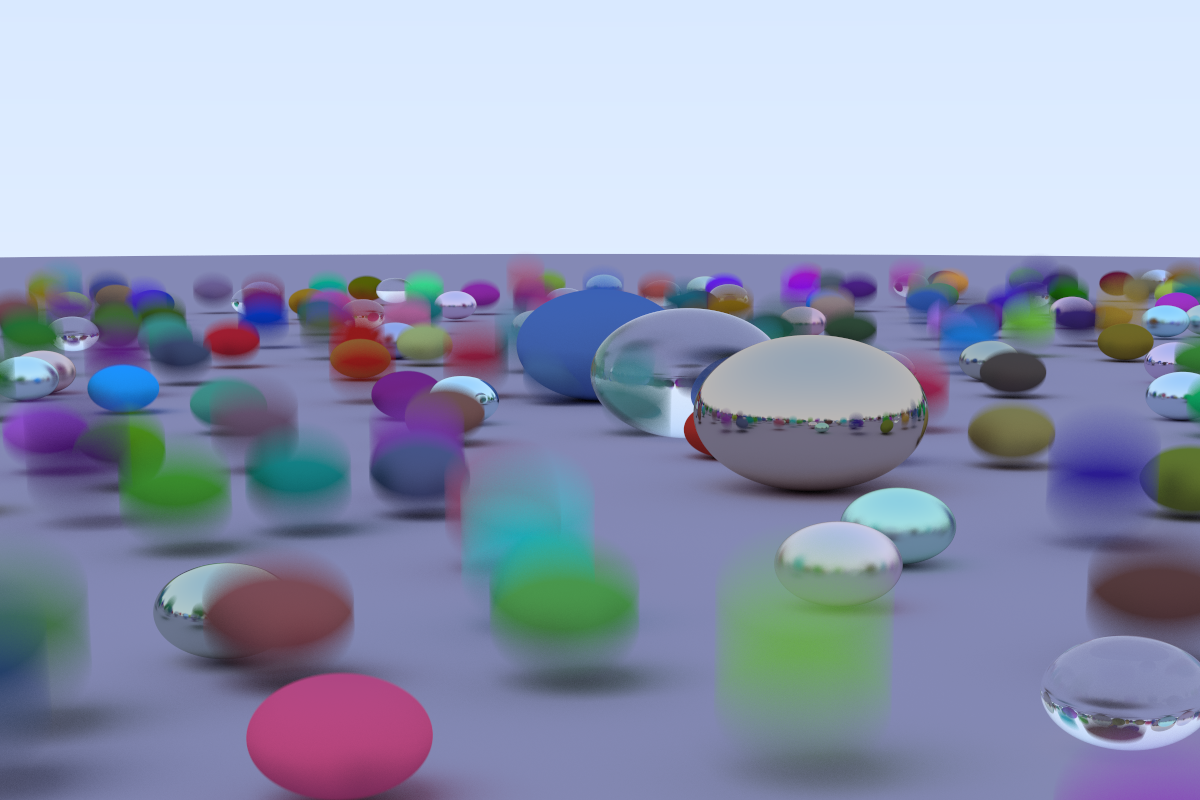

An image created with the Rust path tracer at 1200x800 resolution and 1024spp

As a challenge to myself to learn Rust, I decided to adapt Peter Shirley’s Raytracing In One Weekend tutorial, which is written in C++, into a Rust application. All of the features from the first book are available, along with some extras:

- OBJ model parsing or randomized scene creation

- Support for configurable dielectric, diffuse, and caustic materials

- Motion blur

- Checkered textures support

- Configuring of rendering parameters through command line arguments

- Multithreaded rendering through the use of Rust’s rayon library

The source code can be found here.

Academic Projects

1. Master’s Thesis On Differentiable Rendering

C++ Python Differentiable Rendering Machine Learning

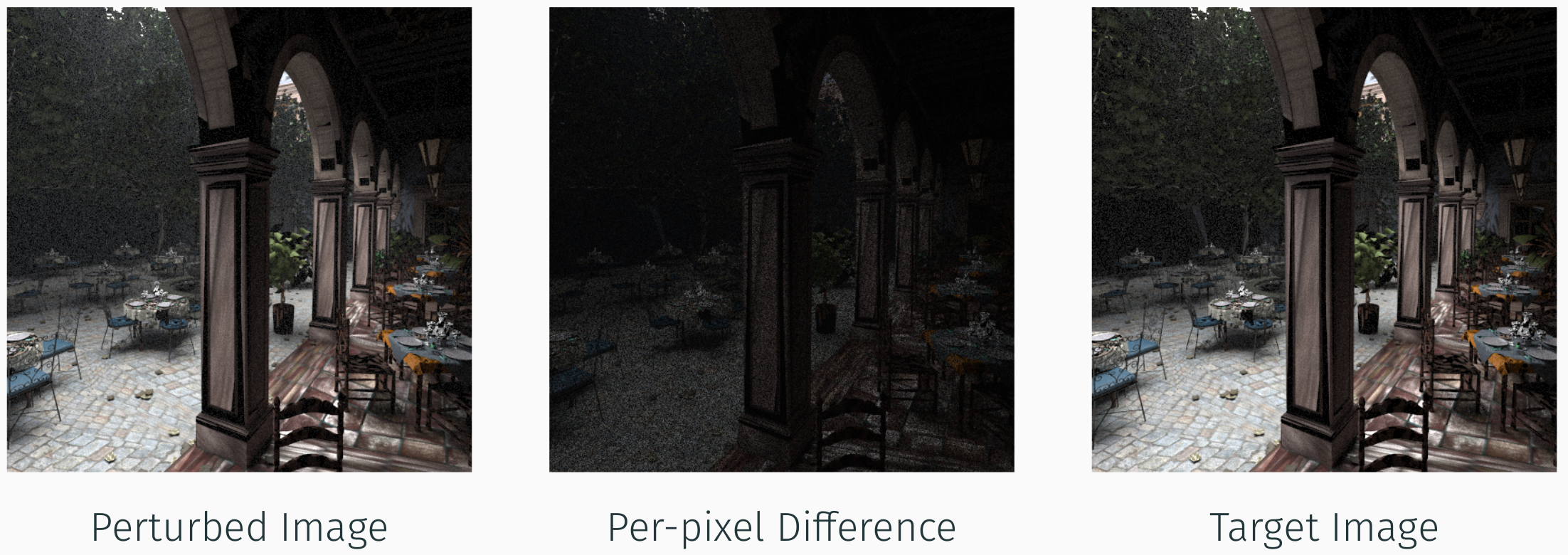

Differentiable rendering tries to minimize the loss between two images by applying gradient descent

For my Master’s thesis I decided to explore differentiable rendering, which combines machine learning with computer graphics. For a good explanation of the topic, I encourage you to watch Wenzel Jakob’s keynote speech at the High Performance Graphics 2020 conference.

In my case, I chose to extend the capabilities of the differentiable renderer redner, which was introduced by Li et al. in the paper Differentiable Monte Carlo Ray Tracing through Edge Sampling, to be able to optimize homogeneous participating media.

The figure above shows one of the test setups that was used to verify the code that I had written. The perturbed scene on the left-hand side is enveloped in a thick fog and the goal of the test run was to remove the fog entirely by optimizing the scene towards the target image on the right-hand side.

The result of running the gradient descent optimization can be seen in the figure below. The fog has been nearly removed from the perturbed image, with some visual artifacts remaining in the tree line in the background.

A capture of the results from optimizing homogeneous participating media through redner
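The idea behind this kind of optimization can be illustrated with a deliberately tiny C++ sketch. It is not redner's method and not the thesis code: it assumes a single pixel, a simplified fog model based on Beer-Lambert transmittance with a constant fog radiance, and an analytic derivative in place of a Monte Carlo gradient estimate. All names (`FogPixel`, `render`, `d_render_d_sigma`, `optimize_sigma`) are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Toy forward model for one pixel: the surface radiance is attenuated by
// Beer-Lambert transmittance and blended with a constant fog radiance.
struct FogPixel {
    double surface;   // radiance of the surface behind the fog
    double fog;       // radiance contributed by the fog itself
    double distance;  // path length through the medium
};

inline double render(const FogPixel& p, double sigma) {
    const double transmittance = std::exp(-sigma * p.distance);
    return transmittance * p.surface + (1.0 - transmittance) * p.fog;
}

// Analytic derivative of render() with respect to the density sigma.
inline double d_render_d_sigma(const FogPixel& p, double sigma) {
    const double transmittance = std::exp(-sigma * p.distance);
    return -p.distance * transmittance * (p.surface - p.fog);
}

// Plain gradient descent on the squared difference to the target pixel.
inline double optimize_sigma(const FogPixel& p, double target, double sigma,
                             double learning_rate, int steps) {
    assert(learning_rate > 0.0 && steps >= 0);
    for (int i = 0; i < steps; ++i) {
        const double residual = render(p, sigma) - target;
        const double gradient = 2.0 * residual * d_render_d_sigma(p, sigma);
        // A physical density cannot be negative, so clamp after each step.
        sigma = std::max(0.0, sigma - learning_rate * gradient);
    }
    return sigma;
}
```

Starting from a dense fog and using the fog-free pixel value as the target, repeated steps drive `sigma` towards zero, which mirrors the fog-removal test described above on a much smaller scale.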

For more details on my thesis please read through my thesis presentation or contact me directly for a copy of the full thesis. The source code can be found here.

3. Computer Graphics 2 (TU Berlin)

OpenGL Qt5 Data Structures Surface Reconstruction Implicit Surfaces

The Computer Graphics 2 course taught a number of different techniques focused around geometric reconstruction and implicit surfaces. All of the assignments focused on a particular topic within those domains and were completed in collaboration with two group members. For the coding portions of the assignments we used OpenGL to perform our graphics computations, with Qt5 acting as the framework for the demos’ user interface. Qt5 allowed us to dynamically adjust the parameters for the various demonstrations. What follows are some of the highlights of the course.

K-d Trees With Nearest Neighbor Point Search

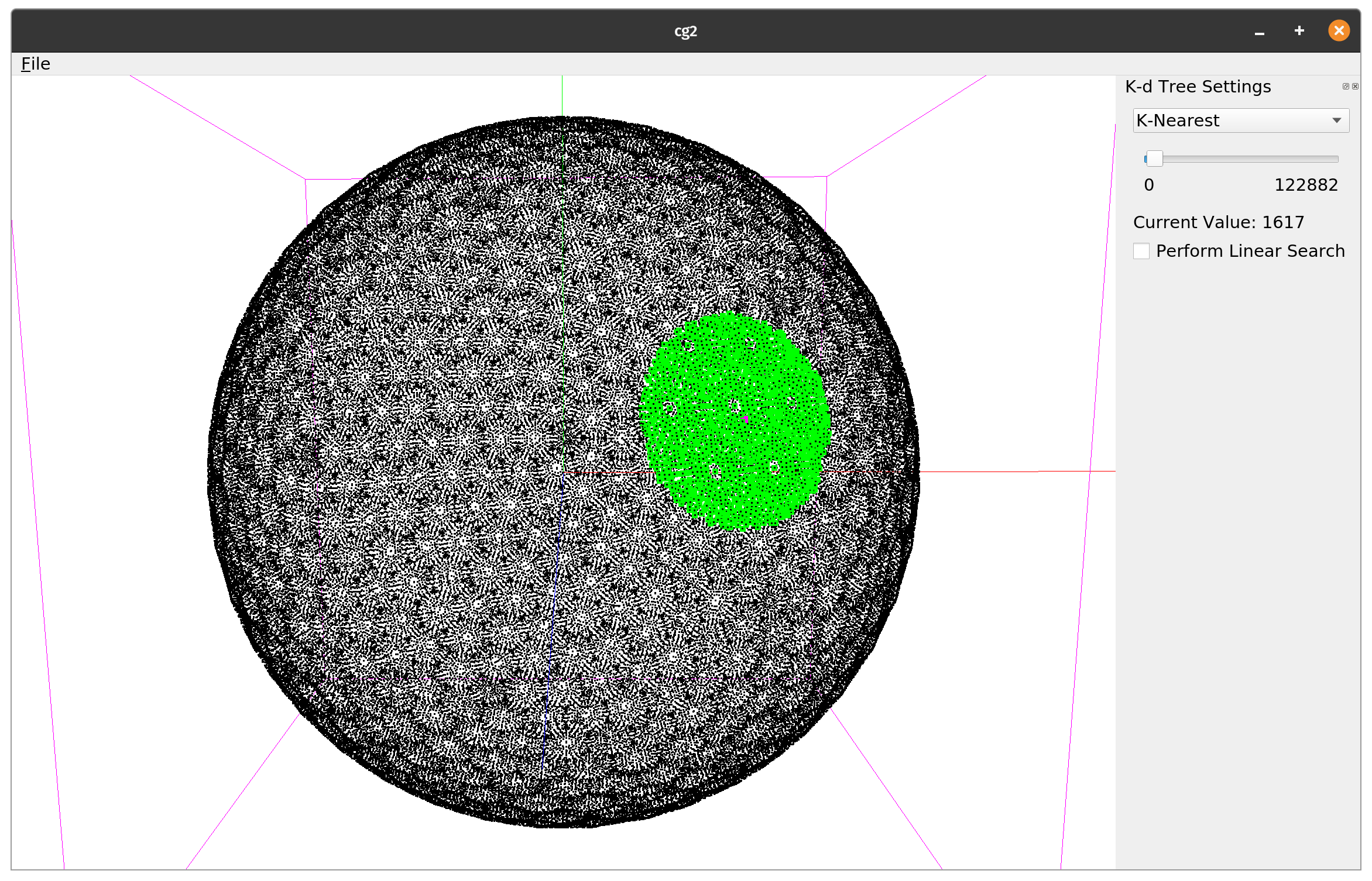

The k-d tree data structure being used to perform k-nearest neighbor search on a point cloud

This assignment revolved around building a k-d tree, a binary tree data structure for k-dimensional point sets. The tree is built by recursively partitioning the k-dimensional (k=3 in our case) spatial domain into two half-spaces. To find a dividing plane for each split, the points in our data sets were sorted using a quicksort algorithm, which I implemented from scratch for efficient sorting.

The k-d tree was then used in our application to implement an N-nearest-neighbor search algorithm that collects a set of points near a given point in space. The image above shows how a user can select a point in the data set, after which the nearest neighbor search gathers a preset number of points in its neighborhood. The selected point is highlighted in pink and the N closest points (N=1617) are shown in green.
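A compact C++ sketch of both steps, building the tree and querying it, might look as follows. It is an illustration under assumptions rather than the course code: it uses `std::nth_element` to find the median instead of a hand-written quicksort, and the names `KdNode`, `build`, `search`, and `k_nearest` are made up for this example.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <memory>
#include <queue>
#include <utility>
#include <vector>

using Point = std::array<float, 3>;

struct KdNode {
    Point point;
    int axis;  // splitting axis of this node: 0 = x, 1 = y, 2 = z
    std::unique_ptr<KdNode> left, right;
};

// Recursively split [first, last) at the median along the cycling axis.
inline std::unique_ptr<KdNode> build(std::vector<Point>::iterator first,
                                     std::vector<Point>::iterator last, int depth = 0) {
    if (first == last) return nullptr;
    const int axis = depth % 3;
    auto mid = first + (last - first) / 2;
    std::nth_element(first, mid, last,
                     [axis](const Point& a, const Point& b) { return a[axis] < b[axis]; });
    auto node = std::make_unique<KdNode>();
    node->point = *mid;
    node->axis = axis;
    node->left = build(first, mid, depth + 1);
    node->right = build(mid + 1, last, depth + 1);
    return node;
}

inline float squared_distance(const Point& a, const Point& b) {
    float sum = 0.0f;
    for (int i = 0; i < 3; ++i) sum += (a[i] - b[i]) * (a[i] - b[i]);
    return sum;
}

// Max-heap keyed on squared distance: the top is the worst of the current best k.
using Candidate = std::pair<float, Point>;
using Heap = std::priority_queue<Candidate>;

inline void search(const KdNode* node, const Point& query, std::size_t k, Heap& best) {
    if (node == nullptr) return;
    best.emplace(squared_distance(node->point, query), node->point);
    if (best.size() > k) best.pop();
    const float delta = query[node->axis] - node->point[node->axis];
    const KdNode* near_side = delta < 0.0f ? node->left.get() : node->right.get();
    const KdNode* far_side = delta < 0.0f ? node->right.get() : node->left.get();
    search(near_side, query, k, best);
    // Visit the far side only if the splitting plane is closer than the worst candidate.
    if (best.size() < k || delta * delta < best.top().first) search(far_side, query, k, best);
}

// Returns up to k nearest points, closest first.
inline std::vector<Point> k_nearest(const KdNode* root, const Point& query, std::size_t k) {
    assert(k > 0);
    Heap best;
    search(root, query, k, best);
    std::vector<Point> result;
    for (; !best.empty(); best.pop()) result.push_back(best.top().second);
    std::reverse(result.begin(), result.end());
    return result;
}
```

The pruning check in `search` is what makes the tree worthwhile: whole subtrees are skipped whenever the splitting plane lies farther away than the worst candidate found so far.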

Surface Reconstruction Using Marching Cubes

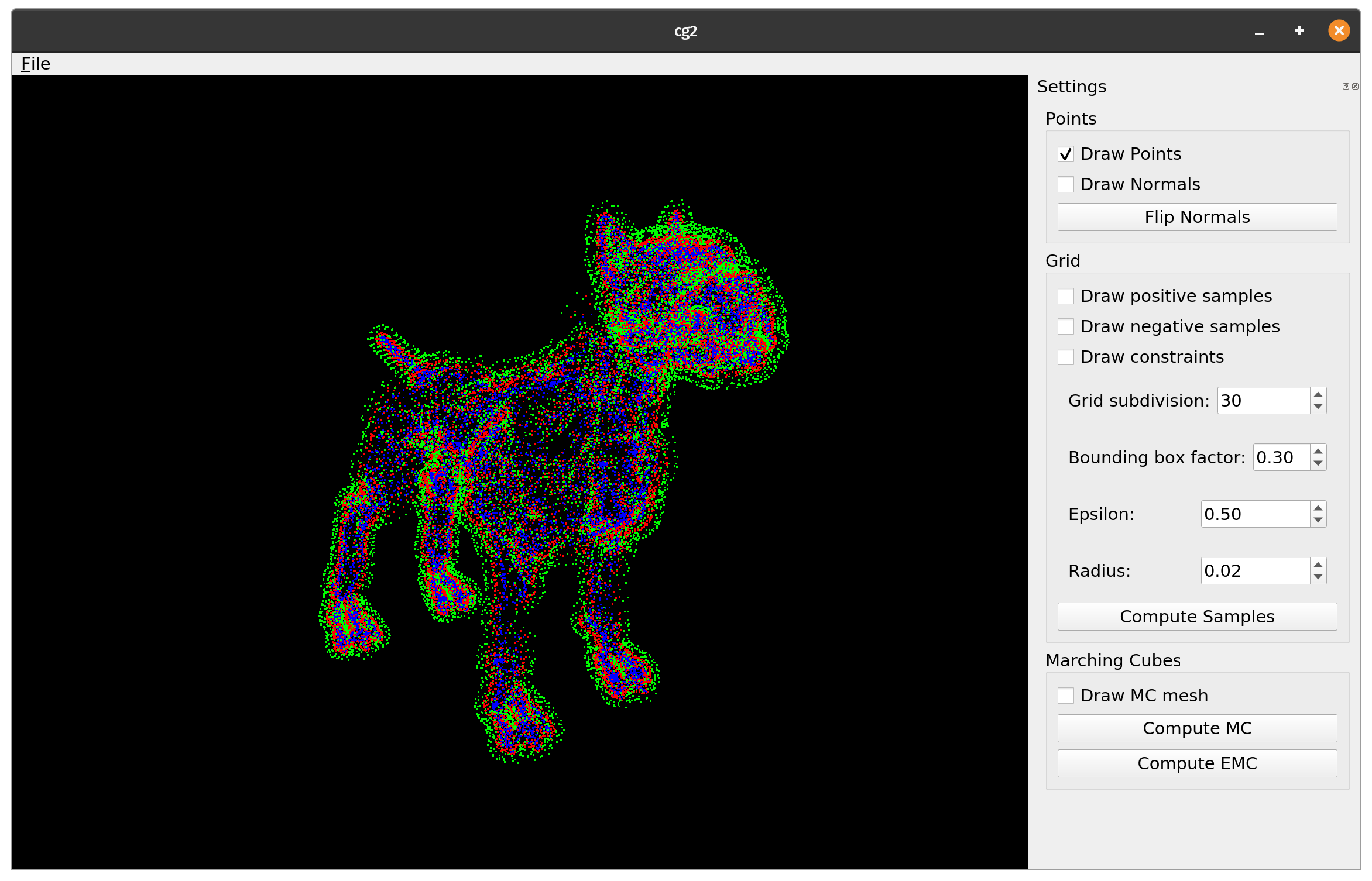

The model in this picture is represented by a point cloud implicit surface implementation

This assignment focused on surface reconstruction using the marching cubes algorithm. Starting from the implicit surface representation shown in the above image, a 3D cube is marched along a fixed grid with adjustable resolution. At each step, we test which parts of the cube are inside or outside of the volume and match the result against a set of 256 precomputed triangle configurations. If the surface intersects the cube, triangles are constructed through the points where the cube’s edges intersect the surface and added to a mesh data structure.
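The two core per-cell computations can be sketched in C++ as shown below. This is a generic illustration of marching cubes, not the assignment's code: the triangle lookup table is omitted, the convention that values below the iso-value count as "inside" is an assumption, and the names `cube_index` and `interpolate_edge` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct Vec3 { float x, y, z; };

// Classify the 8 corners of one grid cell against the iso-value. Each corner
// contributes one bit, giving one of 2^8 = 256 possible configurations, which
// would then be used as an index into a precomputed triangle table.
inline std::uint8_t cube_index(const std::array<float, 8>& corner_values, float iso) {
    std::uint8_t index = 0;
    for (int i = 0; i < 8; ++i) {
        if (corner_values[i] < iso) index |= static_cast<std::uint8_t>(1u << i);
    }
    return index;
}

// Linearly interpolate where the surface crosses the edge between two corners.
inline Vec3 interpolate_edge(const Vec3& p0, float v0, const Vec3& p1, float v1, float iso) {
    assert((v0 < iso) != (v1 < iso));  // the edge must actually cross the surface
    const float t = (iso - v0) / (v1 - v0);
    return Vec3{p0.x + t * (p1.x - p0.x),
                p0.y + t * (p1.y - p0.y),
                p0.z + t * (p1.z - p0.z)};
}
```

An index of 0 or 255 means the cell lies entirely on one side of the surface and produces no triangles; every other index selects a configuration whose triangle vertices are placed with `interpolate_edge`.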

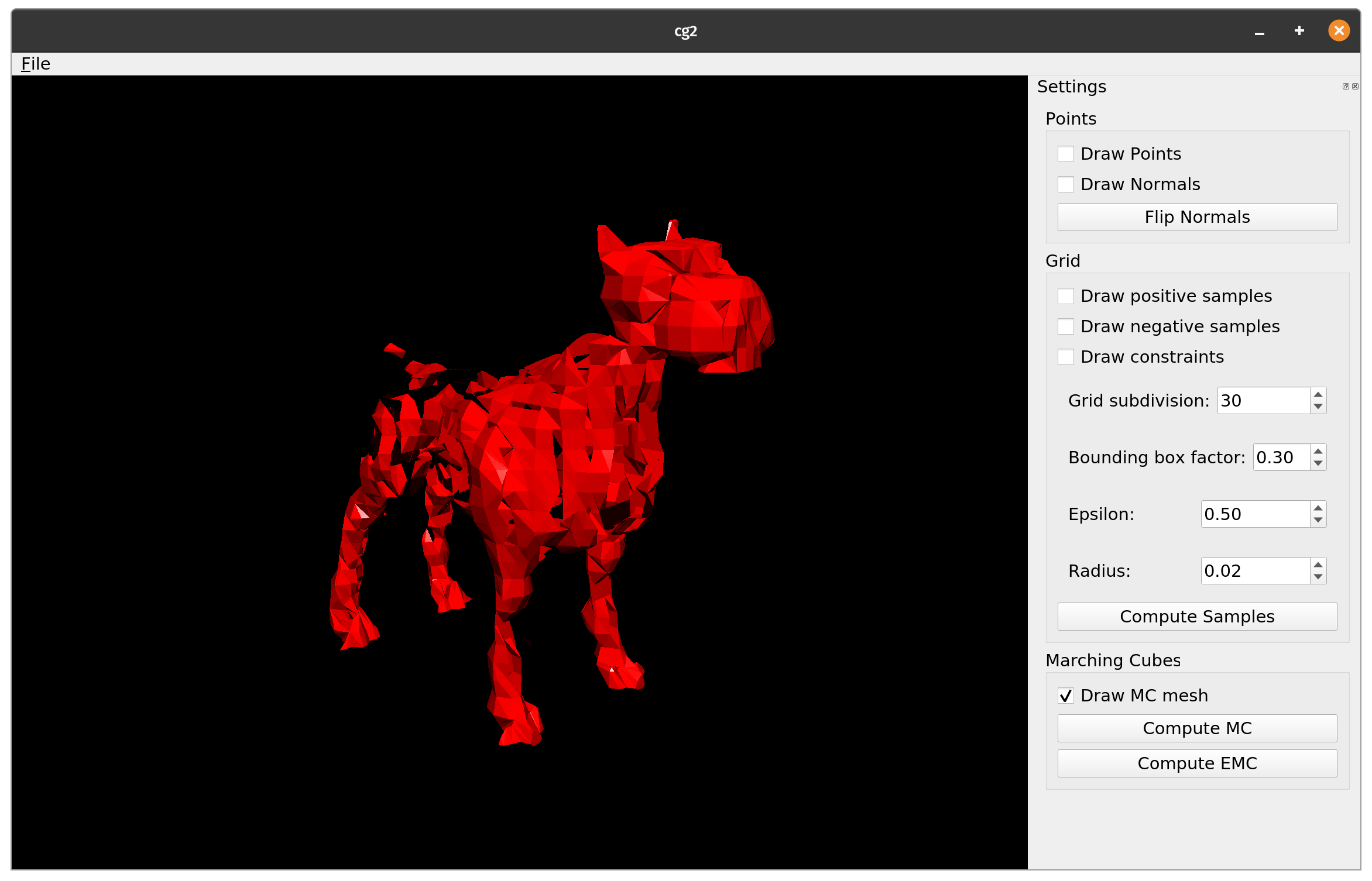

The reconstructed surface model through the use of a Marching Cubes algorithm

One such mesh, which was created after one run of the marching cubes algorithm, can be seen above. Although the mesh is not a perfect representation of the surface, it is possible to create finer approximations by adjusting the grid subdivision and tuning the nearest neighbor search parameters.

The source code for these projects can be found here.

4. Computer Graphics 1 (TU Berlin)

JavaScript WebGL Shaders Scene Graphs

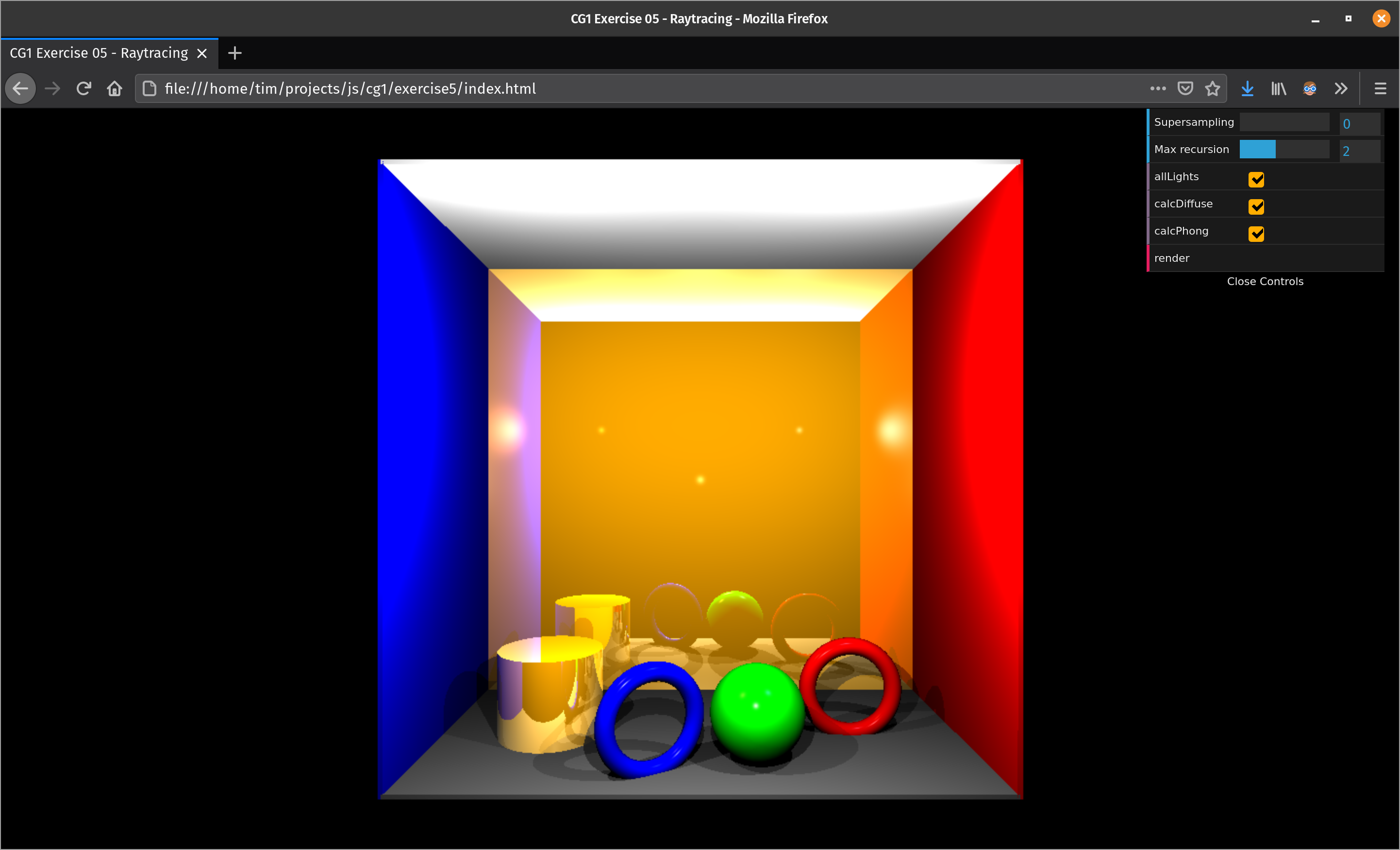

A WebGL ray tracer that I wrote as part of my studies in CG1

The very first course that I took for computer graphics was the CG 1 course taught at the Technical University Berlin. This was an introductory course which covered a classical forward rendering pipeline from front to back. Among the topics covered were:

- Scene graph representations and linear transformations

- Camera projections

- Texture mapping

- Various shading techniques (Gouraud, Phong, etc.)

- Color theory

The final assignment for the course is what convinced me to focus on rendering. The assignment had us write a ray tracer in WebGL, which produced the output image seen above. The simplicity of the ray tracing algorithm, along with the stunning output it provides, drove me to learn more about the subject, and soon enough I was deep down the rendering rabbit hole.

The source code for the ray tracer as well as the other projects can be found here.

Conference Papers

EDBT 2019 Demonstration Paper Publication

C++ Scientific Writing IoT Sensor Networks

During my time as a Student Research Assistant with the Database Systems and Information Management Group at the Technical University in Berlin, I got the chance to work on a demonstration paper that was presented at the Extending Database Technology conference in 2019. The work focused on recording and replaying sensor data on IoT devices. The abstract of the paper is quoted below:

As the scientific interest in the Internet of Things (IoT) continues to grow, emulating IoT infrastructure involving a large number of heterogeneous sensors plays a crucial role. Existing research on emulating sensors is often tailored to specific hardware and/or software, which makes it difficult to reproduce and extend. In this paper we show how to emulate different kinds of sensors in a unified way that makes the downstream application agnostic as to whether the sensor data is acquired from real sensors or is read from memory using emulated sensors. We propose the Resense framework that allows for replaying sensor data using emulated sensors and provides an easy-to-use software for setting up and executing IoT experiments involving a large number of heterogeneous sensors. We demonstrate various aspects of Resense in the context of a sports analytics application using real-world sensor data and a set of Raspberry Pis.

– Resense: Transparent Record and Replay of Sensor Data in the Internet of Things

The full paper can be read here.